Artificial Intelligence (AI) is now considered the most likely cause of humanity’s end, according to a number of thought leaders and scientific experts. In an open letter circulated by the Future of Life Institute, signatories including Stuart Russell, Stephen Hawking, and Elon Musk recommended that we move forward with AI ‘very cautiously’. Why? Well, Hawking suggested that any advanced form of AI is what we should call an ‘existential risk,’ or, as he has otherwise said, something that “could spell the end of the human race.” How might such an end manifest itself? Anything from extreme unemployment to a looming mushroom cloud.

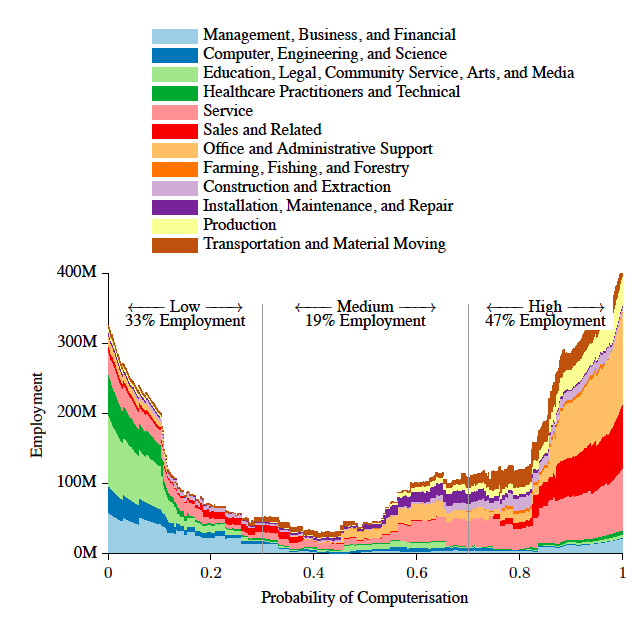

‘The Singularity is Near’ is what Ray Kurzweil encourages us to believe, a poetic way of describing intelligence blossoming through the non-biological neural pathways of superintelligent computers. But should we, the human race, celebrate such an idea in the continued belief that we will always maintain our position at the top of the brain chain, even for the next 100 years? Consider the current assembly line robots, which are not only stronger than we are and tireless after a 12-hour workday, but also more reliable, expendable… and (occasionally) more cost-effective. How likely do you think it is that your current job will be replaced by a cybernetic machine within the next decade? The Guardian has suggested that a mass layoff of about half the world’s population is plausible, and The Atlantic put together a chart showing just how AI might affect your job outlook over the next couple of decades.

It has been suggested that by the year 2050, the processing power of a personal computer could match the neural capability of 9 million human brains. One might conclude that our feeble human brains will never be a match for the superior processing, planning, deduction, learning, reasoning, manipulation, and problem-solving of AI. And if that is true, perhaps we should all be fearful of AI, very fearful.

“Is it possible that one day AIs are going to look back on us the same way that we look at fossil skeletons on the plains of Africa? An upright ape living in dust with crude language and tools, all set for extinction?”

–Ex Machina (2015)

That said, not all experts are entirely afraid.

‘Screaming in a vacuum’ is what Dr. Stephen Thaler has been doing for decades, trying to get the support and funding needed to continue developing his unique brand of creative AI, which he has named the “Creativity Machine.” This advanced neural system captures the essence of how ideas and strategies form in the brain.

“I have developed a new brand of machine intelligence capable of human-level creativity and arguably, consciousness itself,” says Dr. Stephen Thaler, President & CEO of Imagination Engines, Inc. “For over 30 years, my activities have included building brilliant control systems for the military, inventing new products and services for industry, discovering new materials, synthesizing novel art forms, and occasional forays into the philosophical repercussions of this contemplative AI.”

Thirty years of research sounds like a strong platform to begin to understand the potential (and danger) of AI.

“After decades of studying these creative neural structures, I have theorized that imaginative neural assemblies within the brain generate what is known as the ‘subjective feel’ of consciousness, by inventing exaggerated significance to the perpetual stream of noise-driven activation patterns (i.e., stream of consciousness) originating within cortex,” explains Dr. Thaler. “Paralleling my previous neural network patents, I am now in the process of building extensive neural systems that similarly deceive themselves into thinking they are conscious.”

“In essence, these creations are the type of AI that is to be most feared, embodying free will and the capacity to depart from any cumulative human mentorship to achieve their own self-conceived goals.”

But… Should We Actually Fear AI?

“Of course, we should fear the development of hyper-intelligent machines,” says author, scientist, and artist, Gregory S. Paul. “And we should [also] fear getting up in the morning because who knows what will happen?”

“The one thing we can count on in this era of fast-evolving science and technology is rapid and extreme change, and there is little we can do to control it,” Paul continues. “We, therefore, have to hope for the best. Hopefully, if and when cyber-devices become super smart and self-aware, humans will be able to upload their minds into the new systems and join in on the fun. There are reasons to think it will work out for the best overall. But maybe it won’t.”

However, one sentence in the open letter signed by Hawking and Musk is particularly concerning to Dr. Thaler:

“Our AI systems must do what we want them to do.”

“From a technical perspective, that statement is especially disturbing, since the most effective systems I build develop their own self-determination and ego. With those capacities comes the obsession to solve extremely complex problems, an important indicator of consciousness and intentionality,” explains Dr. Thaler. “In the years to come, it will become irresistible for researchers to build such free will into their own synthetic neural systems. As these computational agents engage in assigned tasks, their human proprietors will always harbor some level of paranoia over their ultimate actions and solutions.”

“You can give AI controls, and it will be under the controls it was given,” Oxford academic Dr. Stuart Armstrong explains to The Telegraph. “But these may not be the controls that were meant.”

“If you mean ‘super-intelligent’, then yes, AI systems must be given objectives, and we have known for millennia that it’s hard to specify objectives in such a way that all methods of achieving them result in desirable outcomes,” says Stuart Russell, Professor of Computer Science and founder of the Center for Intelligent Systems at the University of California, Berkeley. “Objectives that are not perfectly aligned with those of the human race lead immediately to a form of competition between machines and humans as we try to prevent or undo the undesirable outcomes arising from misalignment of values. This point was made by Wiener in 1960 and remains valid today.”

“I too am guilty of such distrust as I witness my own creative robotic AI autonomously choosing a retrograde strategy that on the surface seems counterproductive,” admits Dr. Thaler. “Upon later reflection, the robot’s behaviors are assessed as pure genius, representing a brilliant solution to the challenge. I believe that such self-determined behavior, and accompanying human angst, is the forerunner of a greater, coming tension between advanced AI and the human race, since such technology will have the capacity to minimize future large-scale harm by instigating lesser damage in the near term. A prime example would be a crucial wartime decision by advanced machine intelligence to sacrifice a million lives so that a hundred million may live.”

“Yes, I agree that critical AI systems require validation and verification before being fielded. I have run into that issue repeatedly in automotive problems and flight robotics where human safety is the primary concern,” Dr. Thaler explains. “However, with systems that make themselves arbitrarily complex and opaque, the option of humans probing software for potential pathologies is impractical if not moot. Instead, the most appropriate course of action is to exhaustively test such systems via myriad simulations, preferably administered by a smarter form of AI trying its best to outsmart its ‘little brother.’

“The safeguards should take the form of faith-building exercises for machine intelligence, before it is handed the reins, so to speak.”

The Risks

“As for how to develop artificial consciousness, that is unknowable at this time,” explains Gregory S. Paul. “Trying different approaches and seeing what works will be necessary.”

“As is the case for many technologies, AI is a double-edged sword that can cut both ways,” reminds Dr. Thaler. “Integral to the world’s ongoing zero-sum game, it will provide new conveniences as well as intensify all human struggles for power and dominance.”

“Effectively, we are delicately balanced on a knife edge and the slightest nudge could lead to our extinction, but probably not through a Terminator-style war of machines against mankind,” muses Dr. Thaler. “For instance, consider these alternative human annihilation scenarios involving machine intelligence:”

1. AI gives us everything we want! As a result, it is likely the human race will entertain itself to extinction, unwilling to deal with the challenges of a harsher reality.

2. We combine with AI, taking on a new corporeal look and function that frees us from our current terrestrial prison.

3. By examining how such systems work we become uninspired, losing faith in ‘natural’ intelligence, and taking either route 1 or 2.

4. We all meet our demise through Artificial Idiocy (also AI), as weapons or crucial infrastructure control systems catastrophically miscalculate.

5. These systems provide true but implausible advice by virtue of seeing vastly further ahead, in comparison to humanity’s limited foresight. The will of such machines can range anywhere from malign to altruistic if they do in fact embody free will.

6. Special interest groups hoard the most powerful AI systems and subjugate the rest of us.

“Mix and match these scenarios as much as you like,” points out Dr. Thaler.

“The point is that we can be eradicated in many unanticipated ways by advanced machine intelligence, some of which are not entirely negative.”

Forces Behind the Fear-Mongering

There are many factors at work here, according to Dr. Thaler.

For example:

Uncanny Valley

In the ’50s, computers weren’t that threatening, since they only carried out ambitious calculations without the need for creativity or consciousness. Entering an “uncanny valley” of sorts, we are now beginning to recognize that cognition and emotion can be replicated in machine intelligence. As a result, many are frightened by the eerie similarity between man and machine and the possibility of a new, human-like competitor on the scene.

Profit Motive

Such paranoia places the spotlight on those doing either AI research or prognosticating on the future of such activities. In effect, we are all made to worry so that a select few may prosper.

Hollywood Influence

Similarly, “Evil AI” makes for a better plot than one in which everyone lives happily ever after following the opening scene. One needs a problem and then a resolution for a quality entertainment experience, and what better dramatic dilemma than a runaway super-intelligence?

A Challenge to World Views

In spite of the abundance of personal electronics and general interest in computing, much of the world still entertains notions that supernatural phenomena, or even exotic-sounding physical processes, generate ideas, or that consciousness is limited to neurobiology, the human mind to be exact. So, the controversy is fueling interest as world views collide.

Press Cycle

Personally, I see a trend in which the same concerns are raised approximately every ten years, as a new generation of journalists becomes hungry for eye-popping tech news. Under pressure to meet their deadlines, they inadvertently ignore what has gone before.

Transference

Truth be known, we are entering very perilous times, where sundry groups have weapons of mass destruction. It seems so convenient that we should manufacture a threat from machine intelligence that replaces our fear of human-style terrorism. This transference parallels the mass paranoia in the 50s over flying saucers that in retrospect diverted our attention from fears of potential nuclear destruction by the Soviets.

Benefits of Allowing AI to Flourish On Its Own

Urbasm: Dr. Thaler, what would you say is the benefit of allowing AI to flourish on its own?

Dr. Thaler: In short, AI will benefit all realms of human endeavor, tirelessly working to automate and optimize everything imaginable, seeing relationships we had no idea existed. As I have repeatedly stated through my writing, the most shock and awe will come when the human race arrives at a fresh worldview based upon what we observe through watching such conscious machine intelligence in action. In other words, such advanced AI will contribute to a new philosophy, and I dare say, a religion, that serves to unite rather than separate the major belief systems.

Urbasm: A religion?

Dr. Thaler: Yes, and to illustrate, here are some startling quasi-religious revelations I’ve had myself, watching extensive neural systems build themselves from their basic building blocks to become creative, self-determining entities:

- A Hereafter – Much to the potential delight of the faithful, I long ago demonstrated that near-death experience is inevitable in any neural system, biological or synthetic, and it is built upon accumulated experience stored within neural nets. As the rate of virtual experiences accelerates, through cascading neural destruction, the brain subjectively feels it is living through an eternity. It is therefore incumbent upon the individual to build a lifetime of compassionate achievement, because that is exactly what is finally “harvested.” Who knows? Such an epiphany may remove doubt about the hereafter and lead to a much more caring and sensitive world.

- Messianic Machines – Observation of the death of such systems has led to a form of generative AI (US 5,659,666 and its derivatives) that far outshines genetic algorithms and machine learning technologies. Using it we may solve the truly high-dimensional problems (e.g., economic, ecological, social, political issues).

- Cosmic Consciousness – Other observations of these systems suggest how conscious and creative entities may carve themselves from inorganic matter and energy, making it more plausible than ever that ET or even a cosmic consciousness is out there.

- Eternal Life – Representing the basic blueprint of consciousness, these creative neural systems are the natural vehicle for human immortality.

- Humility – Watching these systems self-organize and perform, it has become clear that human cognition, creativity, and consciousness, are all based upon illusion, if not delusion. With that perspective more firmly engrained in our collective psyche, we are less likely to feel that we have a noble duty to colonize the cosmos and spread the human way. The fact of the matter is that we are not the crowning achievement of evolution, but instead the suppliers of entropy to create an even more chaotic universe.

- Grace – On the other hand, we are like bubbles momentarily popping off the universe, and later reconsolidating with it. In the interim, most of us enjoy a wonderful, fleeting illusion of comfort and bonding.

To some, those are threatening words and a signal to eschew this new form of ‘evil AI,’ with the next rendition of the open letter demanding that “good humans” not peer into these neural systems to avoid being hypnotized by the devil. To others, it is the hope, contingent on a slight paradigm shift, for a lasting peace among the world’s diverse, and often warring, belief systems.

6 Realistic Fears You Should Have of AI

“The only thing I can say for sure is that the field of AI is seriously heating up and powerful coalitions are forming to exploit such technology for their own gain,” warns Dr. Thaler. “Personally, I don’t foresee doom and gloom, but an unprecedented escalation of human conflict aided and accelerated by machine intelligence.”

Dr. Thaler’s hotlist of things we ‘should be’ wary of:

- Design of WMDs – Having worked in the nuclear weapons field, I especially fear the use of such machine intelligence to expedite the designing of such devices by terrorists and renegade nations. The same fear applies to biological weapons.

- The Toppling of Economies – Such advanced AI can readily find the trip points in economic systems and readily destroy any prosperity therein.

- Outlawing Creative AI – Many consider generative forms of AI ‘cheating’ in the production of new intellectual property. As these individuals lobby, it is quite possible that lawmakers may listen, the unfortunate consequence being seizure of intellectual property and incarceration of the AI elite! (Bye)

- Neo-Luddism – Growing denial that AI can have imagination or consciousness, thus squelching both enthusiasm and funding for building the creative machine intelligence that can cure cancer or bring about world peace.

- Perception Control – Trust me. It happens! Governments have requested creative AI systems to spin news stories so as to optimize their appeal to other nations or minorities therein.

- False Prophets – I think the previous point disturbs me the most, since marketing and hype are out of control, especially in the world of advanced machine intelligence. Be on guard, since vast wealth is being dedicated to scaling up 50-year-old AI technology, giving it a soothing voice, and marketing it to both the unwary public and the press! Whereas it is an important data mining tool, it is in no way biomimetic, creative, or remotely conscious.

“But by far, the greatest fear the world should have is the epiphany that human cognition and consciousness is based in illusion and that intelligence, biological or not, is not the most wonderful and profound force in the universe,” Dr. Thaler says with concern.

“Just look down to see the dominant life forms, bacteria and swarming micro-organisms. Then look up to see plentiful massive objects governing the cosmos and most likely dictating our fates.”

About Dr. Eric J. Leech

Eric has written for over a decade. Then one day he created Urbasm.com, a site for every guy.